Building HelpMe: An AI-Powered CLI Tool for Linux Automation

A deep dive into how I built a terminal assistant that translates natural language into executable Linux commands using advanced AI agents, complete with validation, safety checks, and multi-provider support.

The Problem: Why I Built HelpMe

Every developer has been there—staring at a terminal, desperately trying to remember the exact syntax for tar, ffmpeg, or some obscure find command. We end up Googling the same commands repeatedly, copying snippets from Stack Overflow, and hoping they work.

I realized this workflow was broken. What if the terminal could understand what I want to do, not just what I tell it to do?

That's when I decided to build HelpMe—a powerful terminal-based CLI tool that bridges the gap between natural language and Linux commands.

What is HelpMe?

HelpMe is a CLI tool designed to help you accomplish user-defined Linux tasks by automatically generating and executing step-by-step commands. The tool uses advanced AI Agents to intelligently generate, validate, and verify each command, ensuring accuracy, safety, and efficiency.

$ helpme "create a folder named 'myproject' with src and tests subdirectories"

Instead of you figuring out the exact commands, HelpMe:

- Understands your intent

- Generates the appropriate commands

- Validates them for safety

- Executes with your confirmation

Key Features

🤖 Automatic Plan Generation

Converts user instructions into clear, sequential commands for the Linux terminal. Complex multi-step tasks become simple one-liners.

✅ Intelligent Plan Verification

Each generated command is verified to ensure it aligns with the user's instructions and incorporates prior feedback before execution. This isn't just "generate and pray"—it's thoughtful, validated automation.

🔄 Flexible Execution Modes

Choose your preferred LLM provider:

- Gemini (Google's API)

- OpenAI (GPT models)

- DeepSeek (Cost-effective alternative)

- Ollama (Local, offline execution with Llama 3.1)

🛡️ Robust Error Handling

Errors during command execution are caught gracefully. The tool provides meaningful feedback without executing subsequent commands that might cause issues.
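The stop-on-first-failure behavior can be sketched roughly as follows. This is a minimal illustration, not HelpMe's actual code: `StepResult` and `run_plan` are hypothetical names, and commands are assumed to be plain shell strings.

```python
import subprocess
from dataclasses import dataclass
from typing import List


@dataclass
class StepResult:
    """Outcome of one executed command (hypothetical structure)."""
    command: str
    returncode: int
    stdout: str
    stderr: str


def run_plan(commands: List[str]) -> List[StepResult]:
    """Run commands in order and stop at the first non-zero exit code."""
    results: List[StepResult] = []
    for command in commands:
        proc = subprocess.run(command, shell=True, capture_output=True, text=True)
        results.append(StepResult(command, proc.returncode, proc.stdout, proc.stderr))
        if proc.returncode != 0:
            # A failed step ends the run; later commands are never executed.
            break
    return results
```

The caller can inspect the last `StepResult` to report what failed and why, while everything after the failing step is left untouched.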

👤 Human-in-the-Loop Safety

Every command requires explicit confirmation before execution. No surprises, no accidental rm -rf /.

The Architecture

I refactored HelpMe into a clean, modular architecture that separates concerns and makes the codebase maintainable:

helpme/

├── core/ # Interfaces, Context, Exceptions

├── services/ # Business logic

│ ├── planner.py # Generates command plans

│ ├── validator.py # Validates commands

│ └── executor.py # Executes with safety checks

├── providers/ # LLM integrations

│ ├── gemini.py

│ ├── openai.py

│ ├── deepseek.py

│ └── ollama.py

├── ui/ # Console UI components

├── config/ # Configuration & constants

└── common/ # Shared utilities & prompts

Why This Architecture?

Separation of Concerns: Each module has a single responsibility. The planner doesn't know about execution; the executor doesn't care which LLM generated the plan.

Provider Abstraction: Adding a new LLM provider is as simple as implementing a single interface. The rest of the system doesn't need to change.

Testability: Each service can be tested in isolation with mocked dependencies.
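To make the provider abstraction concrete, here is a small sketch of what such an interface might look like. The names `LLMProvider`, `complete`, `EchoProvider`, and `get_provider` are assumptions for illustration; the real interface in `core/` may differ.

```python
from abc import ABC, abstractmethod
from typing import Dict, Type


class LLMProvider(ABC):
    """Hypothetical interface that every provider would implement."""

    @abstractmethod
    def complete(self, prompt: str) -> str:
        """Return the model's raw text response for a prompt."""


class EchoProvider(LLMProvider):
    """Stand-in provider for tests; a real one would call an LLM API."""

    def complete(self, prompt: str) -> str:
        return '{"steps": []}'


# Registry mapping a --provider name to its implementation (assumed design).
PROVIDERS: Dict[str, Type[LLMProvider]] = {"echo": EchoProvider}


def get_provider(name: str) -> LLMProvider:
    """Look up and instantiate a provider by name."""
    try:
        return PROVIDERS[name]()
    except KeyError:
        raise ValueError(f"Unknown provider: {name}") from None
```

With this shape, the planner depends only on `LLMProvider`, so a test can pass in `EchoProvider` and never touch the network.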

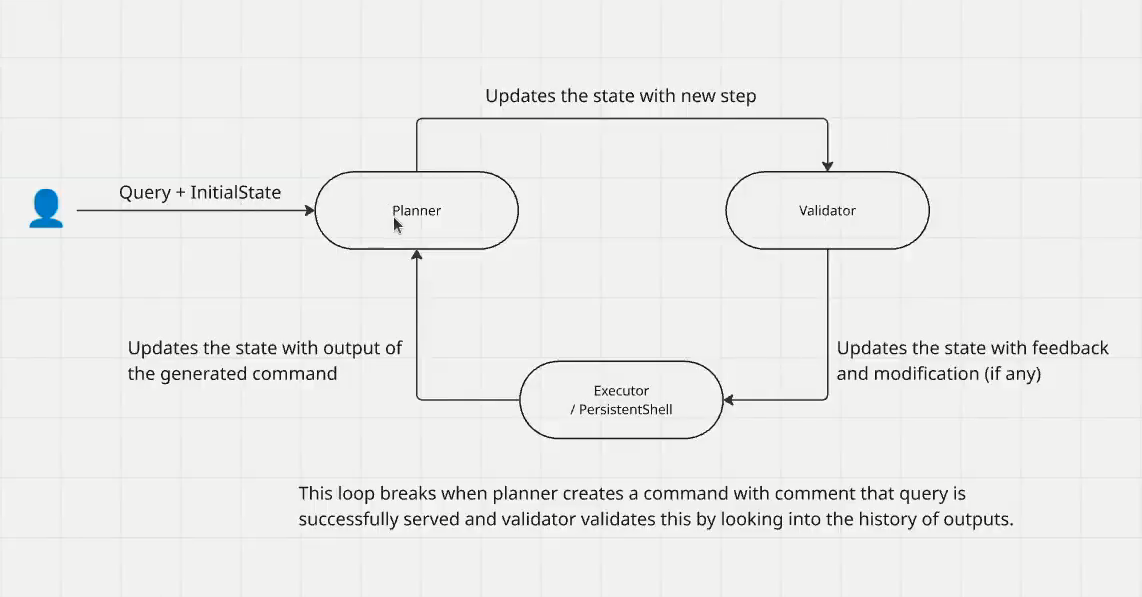

How It Works: The Execution Flow

Step 1: Input Processing

The user provides a natural language instruction:

helpme "find all Python files modified in the last 7 days"

Step 2: Context Construction

HelpMe gathers system context:

- Operating system details

- Current shell environment

- Working directory

- Available tools
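Gathering that context needs nothing beyond the standard library. The sketch below is one possible approach; `build_context` and the default tool list are hypothetical, not taken from the HelpMe source.

```python
import os
import platform
import shutil
from typing import Dict, List, Sequence, Union


def build_context(
    tools: Sequence[str] = ("git", "docker", "tar", "ffmpeg"),
) -> Dict[str, Union[str, List[str]]]:
    """Collect basic facts about the machine to include in the prompt."""
    return {
        "os": f"{platform.system()} {platform.release()}",
        # SHELL is set by most login shells; fall back if it is missing.
        "shell": os.environ.get("SHELL", "unknown"),
        "cwd": os.getcwd(),
        # shutil.which returns None when a tool is not on PATH.
        "available_tools": [t for t in tools if shutil.which(t)],
    }
```

Telling the model which tools are installed helps it avoid planning around a binary such as `fdupes` that is not actually available.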

Step 3: Plan Generation

The planner service sends the instruction and the system context to the selected LLM, which returns a structured plan:

{

"steps": [

{

"command": "find . -name '*.py' -mtime -7",

"description": "Find Python files modified in last 7 days"

}

]

}
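Because the plan comes from a model, it is worth checking its shape before trusting it. The following sketch parses the JSON format shown above into typed steps; `Step` and `parse_plan` are illustrative names, and the strictness of the checks is an assumption.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class Step:
    command: str
    description: str


def parse_plan(raw: str) -> List[Step]:
    """Parse the LLM's JSON plan, rejecting malformed input."""
    data = json.loads(raw)
    if not isinstance(data, dict) or not isinstance(data.get("steps"), list):
        raise ValueError("plan must be an object with a 'steps' list")
    parsed: List[Step] = []
    for item in data["steps"]:
        # Every step needs at least a command string; description is optional.
        if not isinstance(item, dict) or not isinstance(item.get("command"), str):
            raise ValueError(f"invalid step: {item!r}")
        parsed.append(Step(item["command"], str(item.get("description", ""))))
    return parsed
```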

Step 4: Validation

Before execution, the validator checks:

- Is this a destructive command?

- Does it match the user's intent?

- Are there any red flags?
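The intent check relies on the LLM, but the "is this destructive?" question can also be approximated with a static pattern check. Below is a deliberately small sketch of that idea. The patterns and the name `find_red_flags` are illustrative assumptions, and a real blocklist would need to be far more thorough.

```python
import re
from typing import List, Tuple

# (pattern, reason) pairs; illustrative only, not an exhaustive list.
DANGEROUS_PATTERNS: List[Tuple[str, str]] = [
    (r"\brm\s+(-\w+\s+)+/(\s|$)", "delete targeting the root directory"),
    (r"\bmkfs(\.\w+)?\b", "filesystem format"),
    (r"\bdd\b.*\bof=/dev/", "raw write to a block device"),
    (r"\b(shutdown|reboot|poweroff)\b", "shutdown or reboot"),
]


def find_red_flags(command: str) -> List[str]:
    """Return the reasons a command looks dangerous (empty list if none)."""
    return [
        reason
        for pattern, reason in DANGEROUS_PATTERNS
        if re.search(pattern, command)
    ]
```

For example, `find_red_flags("rm -rf /")` flags the command, while `find_red_flags("rm -rf /tmp/build")` does not, because the pattern only matches a bare `/` target.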

Step 5: Human Confirmation

The command is presented to the user:

Suggested command: find . -name '*.py' -mtime -7

Execute? [Y/n]
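A prompt like this takes only a few lines. In the sketch below, `confirm` is a hypothetical helper, and the `ask` parameter exists only so the function can be tested without a real terminal.

```python
from typing import Callable


def confirm(command: str, ask: Callable[[str], str] = input) -> bool:
    """Show the suggested command and ask the user whether to run it."""
    print(f"Suggested command: {command}")
    answer = ask("Execute? [Y/n] ").strip().lower()
    # The capital Y marks the default, so an empty answer counts as yes.
    return answer in ("", "y", "yes")
```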

Step 6: Execution

Only after explicit confirmation does the executor run the command, capturing output and errors.
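Capturing output and errors maps naturally onto `subprocess.run`. This is a sketch under assumptions: `execute` and `format_status` are hypothetical names, the timeout and the 124 exit code (borrowed from the `timeout` utility's convention) are illustrative choices, and the "Failed" label is a guess at how a non-zero status would be reported.

```python
import subprocess
from typing import Tuple


def execute(command: str, timeout: float = 60.0) -> Tuple[int, str, str]:
    """Run one shell command and return (exit code, stdout, stderr)."""
    try:
        proc = subprocess.run(
            command, shell=True, capture_output=True, text=True, timeout=timeout
        )
    except subprocess.TimeoutExpired:
        # Assumed convention: report a timeout with exit code 124.
        return 124, "", f"timed out after {timeout} seconds"
    return proc.returncode, proc.stdout, proc.stderr


def format_status(returncode: int) -> str:
    """Render a status line in the style of 'Status: Success (Code: 0)'."""
    label = "Success" if returncode == 0 else "Failed"
    return f"Status: {label} (Code: {returncode})"
```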

Getting Started

Prerequisites

- Python 3.8 or higher

- API key for your preferred LLM provider (or Ollama for local execution)

Installation

# Clone the repository

git clone https://github.com/ASK-03/helpme

cd helpme

# Install dependencies

pip install -r requirements.txt

# Set up your API key

echo "GEMINI_API_KEY=your_key_here" > .env

Basic Usage

# Run with Python

python -m src.cli "your instruction here" --provider gemini

# Examples

python -m src.cli "list all running Docker containers" --provider gemini

python -m src.cli "compress all images in current folder" --provider openai

python -m src.cli "create a Python virtual environment" --provider ollama

Creating a Global Executable

For power users, you can create a standalone executable:

# Build with PyInstaller

pyinstaller --onefile src/cli.py --name helpme

# Copy the binary to a directory on your PATH

sudo cp dist/helpme /usr/bin/helpme

# Now use from anywhere!

helpme "your instruction" --provider gemini

Real-World Examples

Example 1: Complex File Operations

$ helpme "find duplicate images and move them to a 'duplicates' folder"

HelpMe generates a multi-step plan using fdupes or similar tools.

Example 2: System Administration

$ helpme "show me which process is using the most memory"

HelpMe returns cleaned-up output from ps aux or top.

Example 3: Development Tasks

$ helpme "set up a new React project with TypeScript and Tailwind"

HelpMe generates the complete npx create-react-app sequence with configurations.

Example Execution Walkthrough

Here is a real-world example of HelpMe in action, automating a multi-step git workflow:

User Input:

helpme "read the changes in the current git repo, make a meaningful commit message and commit the changes and push to origin" --provider gemini

HelpMe Output:

Model is planning next step...

Model is validating step...

✓ Step Check the current status of the git repository to identify changes. Summary:

Command: git status

Reason: This is the initial step to understand what changes are present in the repository. The output of 'git status' will show modified,

added, or deleted files, which is essential information for deciding which files to stage and for crafting a meaningful commit message in

subsequent steps. This command does not produce a very long output and is a standard first step in a git workflow.

Feedback: The `git status` command is a safe and essential read-only operation for understanding the current state of the Git repository. It

does not modify any files or system configurations, nor does it involve downloading or installing software. This command directly supports the

overall instruction to "read the changes in the current git repo" by providing the necessary information to proceed with staging and committing

changes. It adheres to all security rules.

Output:

On branch master

Your branch is up to date with 'origin/master'.

Changes not staged for commit:

(use "git add <file>..." to update what will be committed)

(use "git restore <file>..." to discard changes in working directory)

modified: readme.md

modified: requirements.txt

modified: src/cli.py

modified: src/config.py

modified: src/executor.py

modified: src/llm.py

modified: src/planner.py

modified: src/validator.py

no changes added to commit (use "git add" and/or "git commit -a")

Status: Success (Code: 0)

Model is planning next step...

Model is validating step...

✓ Step Stage all identified changes in the repository to prepare for committing. Summary:

Command: git add .

Reason: Step 1 (`git status`) successfully identified several modified files that are not yet staged for commit. To proceed with the task of

committing these changes, they must first be staged. `git add .` is the standard command to stage all modified and new files in the current

directory and its subdirectories, directly addressing the output of the previous step and moving towards the commit goal. The output of this

command is usually minimal, indicating success or any issues, and does not produce a very long output.

Feedback: The command `git add .` is a standard and safe operation in Git workflows. It stages all modified and new files in the current

directory and its subdirectories, preparing them for the next commit. This step is a logical progression after `git status` has identified

changes and is essential for fulfilling the overall instruction to commit changes. It does not violate any security rules, as it does not

delete data, reboot systems, or download software. The command is appropriate for the described purpose.

Output:

No output

Status: Success (Code: 0)

Model is planning next step...

Model is validating step...

✓ Step Get a summary of the staged changes to help craft a meaningful commit message. Summary:

Command: git diff --staged --stat

Reason: After staging all changes in Step 2, it's crucial to understand the nature of these changes before committing. `git diff --staged

--stat` provides a concise summary of the modifications (files changed, lines added/deleted), which is essential for creating a meaningful and

accurate commit message as required by the task. This command avoids producing a very long output while still providing sufficient context. The

output will be used in the next step to formulate the commit message.

Feedback: The command `git diff --staged --stat` is safe and appropriate. It is a read-only command that provides a concise summary of staged

changes, which is essential for crafting a meaningful commit message as per the overall instruction. It does not violate any security rules,

nor does it modify, delete, or corrupt any data. The command is well-suited for its described purpose in the current execution context.

Output:

readme.md | 34 ++-----

requirements.txt | 5 +

src/cli.py | 47 +++++++--

src/config.py | 17 ++--

src/executor.py | 298 ++++++++++++++++++++++++++++++++++++++++---------------

src/llm.py | 2 +-

src/planner.py | 48 +++++----

src/validator.py | 46 +++++----

8 files changed, 331 insertions(+), 166 deletions(-)

Status: Success (Code: 0)

Model is planning next step...

Model is validating step...

✓ Step Commit the staged changes with a meaningful message summarizing the updates. Summary:

Command: git commit -m "Refactor: Enhance core CLI logic, update dependencies and documentation"

Reason: Step 3 (`git diff --staged --stat`) provided a summary of the staged changes, indicating modifications across several core source files

(cli.py, executor.py, llm.py, planner.py, validator.py), as well as readme.md and requirements.txt. This information is used to craft a concise

and meaningful commit message that reflects the nature of these updates. Committing the changes is a direct requirement of the task and follows

logically after staging and reviewing the changes. The output of this command will confirm the commit and will be used in the next step to

verify the commit was successful before pushing.

Feedback: The `git commit` command is safe and appropriate. It directly addresses the instruction to 'make a meaningful commit message and

commit the changes'. The commit message 'Refactor: Enhance core CLI logic, update dependencies and documentation' is well-crafted and reflects

the changes identified in the previous `git diff --staged --stat` command, which showed modifications to core CLI files, documentation, and

dependencies. This step is a logical progression after staging changes and reviewing them.

Output:

Refactor: Enhance core CLI logic, update dependencies and documentation

8 files changed, 331 insertions(+), 166 deletions(-)

Status: Success (Code: 0)

Model is planning next step...

Model is validating step...

✓ Step Push the committed changes to the remote 'origin' repository. Summary:

Command: git push origin master

Reason: Step 4 successfully committed the staged changes. The final requirement of the task is to 'push to origin'. This command will push the

local 'master' branch (as indicated by 'git status' in Step 1) to the 'origin' remote, thereby completing the entire task. The output will

confirm the success or failure of the push operation.

Feedback: The `git push origin master` command is appropriate and safe in this context. It directly fulfills the final requirement of the

overall instruction to 'push to origin' after the changes have been successfully committed in the previous step. This command does not violate

any security rules, as it's a standard operation for synchronizing local commits with a remote repository and does not involve data deletion,

corruption, or software installation.

Output:

To https://github.com/ASK-03/helpme.git

80c4c00..34b3d10 master -> master

Status: Success (Code: 0)

✅ Task Completed Successfully!

Challenges & Lessons Learned

Safety is Paramount

Allowing an AI to generate shell commands is inherently risky. I implemented multiple safety layers:

- Blocklist for dangerous patterns (rm -rf /, etc.)

- Confirmation for any write/delete operations

- Dry-run mode for testing
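The three layers in the list above can be thought of as a single decision applied in order. The sketch below shows that layering. `Action`, `decide`, and both regular expressions are hypothetical, and the "mutating command" heuristic is intentionally crude.

```python
import re
from enum import Enum


class Action(Enum):
    BLOCK = "block"      # refused outright
    PREVIEW = "preview"  # dry run: show the command, do not execute
    CONFIRM = "confirm"  # ask the user first
    RUN = "run"          # safe to execute directly


# Illustrative patterns only; real rules would need much more coverage.
BLOCKED = re.compile(r"\brm\s+(-\w+\s+)+/(\s|$)")
MUTATING = re.compile(r"^\s*(sudo\s+)?(rm|mv|cp|dd|chmod|chown|truncate|tee)\b|>")


def decide(command: str, dry_run: bool = False) -> Action:
    """Apply the safety layers in order: blocklist, dry run, confirmation."""
    if BLOCKED.search(command):
        return Action.BLOCK
    if dry_run:
        return Action.PREVIEW
    if MUTATING.search(command):
        return Action.CONFIRM
    return Action.RUN
```

Checking the blocklist first means a dangerous command is rejected even in dry-run mode, so it is never presented as something that could be run.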

Latency Optimization

For a CLI tool, speed is critical. Nobody wants to wait 10 seconds for a simple command. I optimized:

- Prompt engineering to reduce token usage

- Streaming responses where supported

- Local caching for common patterns
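As one illustration of the caching idea, a plan can be stored on disk under a hash of the instruction and context, so a repeated request skips the LLM call. `PlanCache` and its key scheme are assumptions for this sketch, not HelpMe's actual cache.

```python
import hashlib
import json
from pathlib import Path
from typing import Optional


class PlanCache:
    """Tiny file-based cache keyed by instruction and context (hypothetical)."""

    def __init__(self, directory: Path) -> None:
        self.directory = directory
        self.directory.mkdir(parents=True, exist_ok=True)

    def _path(self, instruction: str, context: dict) -> Path:
        # Normalize the instruction so trivial variations share one entry.
        key = json.dumps(
            {"instruction": instruction.strip().lower(), "context": context},
            sort_keys=True,
        )
        digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
        return self.directory / f"{digest}.json"

    def get(self, instruction: str, context: dict) -> Optional[dict]:
        path = self._path(instruction, context)
        if not path.exists():
            return None
        return json.loads(path.read_text(encoding="utf-8"))

    def put(self, instruction: str, context: dict, plan: dict) -> None:
        self._path(instruction, context).write_text(
            json.dumps(plan), encoding="utf-8"
        )
```

Including the context in the key matters: a plan generated for one OS or working directory should not be replayed in another.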

Average response time: under 2 seconds on Gemini.

Provider Differences

Each LLM has quirks. GPT-4 is verbose. Gemini is fast. Llama is great offline. I built a normalization layer to handle these differences transparently.
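One common quirk is a model wrapping its JSON in a Markdown code fence or surrounding it with chatty text. A normalization step for that case might look like the sketch below; `normalize_response` is a hypothetical name, and this covers only one of the differences such a layer would handle.

```python
import json
import re

# Three backticks, built indirectly so this example nests safely in Markdown.
TICKS = "`" * 3
FENCE = re.compile(TICKS + r"(?:json)?\s*(.*?)" + TICKS, re.DOTALL)


def normalize_response(raw: str) -> dict:
    """Extract the JSON object from a possibly verbose LLM response."""
    match = FENCE.search(raw)
    candidate = match.group(1) if match else raw
    # Keep only the outermost {...} span and discard surrounding chatter.
    start, end = candidate.find("{"), candidate.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in response")
    return json.loads(candidate[start : end + 1])
```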

What's Next?

I'm actively working on:

- Shell integration: Zsh/Bash plugins for seamless integration

- Command history learning: Personalized suggestions based on your patterns

- Multi-turn conversations: Follow-up refinements to commands

- Browser automation: Extending beyond the terminal

Conclusion

HelpMe has transformed my daily workflow. The terminal is no longer a memory test—it's a powerful canvas where I describe what I want, and it happens.

HelpMe is open source and available on GitHub. Contributions are welcome!

What repetitive commands would you automate with HelpMe? Let me know on X/Twitter!

Written by Abhishek Singh Kushwaha